Robots.txt is a file that tells search engine robots which areas of your website they may crawl. What exactly is its role? How do you create a robots.txt file? And how do you use it for your SEO?

What is the robots.txt file?

The robots.txt file is a plain-text file placed at the root of your website. It tells search engine robots not to crawl certain areas of your site. It is one of the first files that spiders (robots) analyze.

What is it used for?

The robots.txt file gives instructions to the search engine robots that analyze your website. It implements the robots exclusion protocol. With this file, you can block the crawling of:

- your entire site by certain robots (also called “agents” or “spiders”),

- certain pages of your site by all robots, or some pages by certain robots only.

To understand why the robots.txt file is useful, take the example of a site made up of a public area for communicating with customers and an intranet reserved for employees. In this case, the public area is open to robots and the private area is off limits to them.
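The scenario above can be sketched with Python's standard urllib.robotparser module. This is a minimal illustration, not a complete crawler: the domain www.example.com, the /intranet/ path, and the robot name BadBot are hypothetical and chosen only for the example.

```python
from urllib.robotparser import RobotFileParser

# Hypothetical rules: one robot is banned from the whole site,
# and every other robot is kept out of the intranet only.
ROBOTS_TXT = """\
User-agent: BadBot
Disallow: /
User-agent: *
Disallow: /intranet/
"""


def may_crawl(agent: str, url: str) -> bool:
    """Return True if the rules above let `agent` crawl `url`."""
    parser = RobotFileParser()
    parser.parse(ROBOTS_TXT.splitlines())
    return parser.can_fetch(agent, url)


# Public area, any robot: allowed.
print(may_crawl("Googlebot", "https://www.example.com/products/"))
# Intranet, any robot: blocked.
print(may_crawl("Googlebot", "https://www.example.com/intranet/payroll.html"))
# Public area, banned robot: blocked.
print(may_crawl("BadBot", "https://www.example.com/products/"))
```

The three calls cover the two cases listed above: a whole site closed to one robot, and one folder closed to all robots.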

This file also tells the engines the address of the sitemap file of the website.

A meta tag named “robots”, placed in the HTML code of a web page, prevents that page from being indexed. It uses the following syntax: <meta name="robots" content="noindex">.
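To check whether a page carries this tag, a small script can read the HTML and look for it. The sketch below uses Python's standard html.parser module; the class name RobotsMetaParser and the function is_noindex are hypothetical names introduced for illustration.

```python
from html.parser import HTMLParser


class RobotsMetaParser(HTMLParser):
    """Collect the directives of every <meta name="robots"> tag."""

    def __init__(self):
        super().__init__()
        self.directives = []

    def handle_starttag(self, tag, attrs):
        if tag != "meta":
            return
        attributes = dict(attrs)
        if (attributes.get("name") or "").lower() != "robots":
            return
        content = attributes.get("content") or ""
        # The content attribute may hold several comma-separated directives.
        self.directives += [
            item.strip().lower() for item in content.split(",") if item.strip()
        ]


def is_noindex(html: str) -> bool:
    """Return True if the page asks robots not to index it."""
    parser = RobotsMetaParser()
    parser.feed(html)
    return "noindex" in parser.directives


page = '<html><head><meta name="robots" content="noindex"></head></html>'
print(is_noindex(page))
```

A page without the tag, or with other directives only, makes is_noindex return False.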

Where can I find the robots.txt file?

The robots.txt file is located at the root level of your website. To check whether it is present on your site, type the following in the address bar of your browser: http://www.yoursiteadress.com/robots.txt.

If the file is:

- present, it will be displayed and the robots will follow the instructions present in the file.

- absent, a 404 error will be displayed and the robots will consider that no content is prohibited.
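The same check can be scripted. The sketch below, using only Python's standard library, builds the robots.txt address from any page URL and maps the two cases above to a short message. The function names are hypothetical, and robots_txt_status needs network access, so it is defined here but not called.

```python
import urllib.error
import urllib.request
from urllib.parse import urlsplit, urlunsplit


def robots_txt_url(page_url: str) -> str:
    """Build the robots.txt address for the site that hosts `page_url`."""
    parts = urlsplit(page_url)
    return urlunsplit((parts.scheme, parts.netloc, "/robots.txt", "", ""))


def robots_txt_status(page_url: str) -> int:
    """Request the site's robots.txt and return the HTTP status code."""
    try:
        with urllib.request.urlopen(robots_txt_url(page_url), timeout=10) as response:
            return response.status
    except urllib.error.HTTPError as error:
        return error.code  # for example 404 when the file is absent


def describe(status: int) -> str:
    """Translate a status code into the two cases described above."""
    if status == 200:
        return "present: robots follow the instructions in the file"
    if status == 404:
        return "absent: robots consider that no content is prohibited"
    return "other status: not covered by this sketch"


print(robots_txt_url("http://www.yoursiteadress.com/blog/post.html"))
print(describe(404))
```

Calling describe(robots_txt_status(url)) on a live site combines the two steps.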

A website contains only one file for robots and its name must be exact and in lowercase (robots.txt).

How to create it?

To create your robots.txt file, you need to be able to access the root of your domain.

The robots.txt file can be created manually, and most CMSs, such as WordPress, generate one by default when they are installed. You can also create your file for robots with online tools.

For manual creation, use a simple text editor such as Notepad and respect:

- a syntax and instructions,

- a file name: robots.txt,

- a structure: one instruction per line and no blank lines inside a group of rules.
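If you prefer to generate the file rather than type it, the three constraints above can be sketched in a few lines of Python. The function build_robots_txt and its parameters are hypothetical names, and the paths and sitemap URL passed to it are example values only.

```python
def build_robots_txt(user_agent, disallow, allow=(), sitemap=None):
    """Return the text of a robots.txt file, one instruction per line."""
    lines = [f"User-agent: {user_agent}"]
    lines += [f"Disallow: {path}" for path in disallow]
    lines += [f"Allow: {path}" for path in allow]
    if sitemap:
        lines.append(f"Sitemap: {sitemap}")
    # No blank lines between instructions, single newline at the end.
    return "\n".join(lines) + "\n"


content = build_robots_txt(
    "*",
    disallow=["/intranet/", "/login.php"],
    sitemap="https://www.example.com/sitemap.xml",  # example value
)
print(content)

# To save it, write the text to a file named exactly robots.txt, e.g.:
# from pathlib import Path
# Path("robots.txt").write_text(content, encoding="utf-8")
```

The resulting file still has to be uploaded to the root of the site.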

To access the root folder of your website, you need FTP access. Without it, you cannot create the file yourself and will have to contact your host or your web agency.

The syntax and instructions of the robots.txt file

The robots.txt files use the following instructions or commands:

- User-agent: user-agents are search engine robots, for example Googlebot for Google or Bingbot for Bing.

- Disallow: disallow is the instruction that prohibits user-agents from accessing a URL or a folder.

- Allow: allow is an instruction authorizing access to a URL placed in a prohibited folder.

Example robots.txt file:

- # file for the robots of the site http://www.adressedevotresite.com/ (comment, ignored by robots)

- User-Agent: * (the rules apply to all bots)

- Disallow: /intranet/ (prohibits exploration of the intranet folder)

- Disallow: /login.php (prohibits exploration of the URL http://www.adressedevotresite.com/login.php)

- Allow: /*.css? (allows access to all CSS resources)

- Sitemap: http://www.adressedevotresite.com/sitemap_index.xml (link to the sitemap for SEO)

In the example above, the User-agent command applies to all crawlers because of the asterisk (*). The hash mark (#) introduces comments, which robots do not take into account.
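To see how a robot might read the example file, the sketch below feeds its rules to Python's standard urllib.robotparser module. The Allow line is left out on purpose: Python's parser compares paths by simple prefix and does not interpret wildcards, so its answers for wildcard patterns may differ from those of search engines.

```python
from urllib.robotparser import RobotFileParser

# The example file from this article, without the wildcard Allow line.
EXAMPLE = """\
# file for the robots of the site http://www.adressedevotresite.com/
User-Agent: *
Disallow: /intranet/
Disallow: /login.php
Sitemap: http://www.adressedevotresite.com/sitemap_index.xml
"""

parser = RobotFileParser()
parser.parse(EXAMPLE.splitlines())

site = "http://www.adressedevotresite.com"
# The asterisk makes the rules apply to any robot name.
home_allowed = parser.can_fetch("Googlebot", site + "/")
login_allowed = parser.can_fetch("Bingbot", site + "/login.php")
intranet_allowed = parser.can_fetch("Googlebot", site + "/intranet/index.html")
# site_maps() is available in Python 3.8 and later.
sitemaps = parser.site_maps()

print(home_allowed, login_allowed, intranet_allowed)
print(sitemaps)
```

The comment line is skipped by the parser, and the home page stays crawlable because no Disallow rule matches it.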

The robots-txt site offers resources specific to certain search engines and certain CMSs.

robots.txt and SEO

In terms of optimizing the SEO of your website, the robots.txt file allows you to:

- prevent robots from crawling duplicate content,

- point robots to the sitemap, which indicates the URLs to index,

- save the “crawl budget” of Google robots by excluding the low-quality pages of your website.

How to edit robots.txt on Shopify?

Edit robots.txt.liquid

If you want to edit the robots.txt.liquid file, then you should work with a Shopify Expert or have expertise in code edits and SEO.

You can use Liquid to add or remove directives from the robots.txt.liquid template. This method preserves Shopify’s ability to keep the file updated automatically in the future, and is recommended. For a full guide on editing this file, refer to Shopify’s Developer page Customize robots.txt.liquid.

Before you edit the robots.txt.liquid file, remove any previous customizations or workarounds, such as a third-party service like Cloudflare.

Steps:

- From your Shopify admin, click Settings > Apps and sales channels.

- From the Apps and sales channels page, click Online store.

- Click Open sales channel.

- Click Themes.

- Click the … button, and then click Edit Code.

- Click Add a new template, and then select robots.

- Click Create template.

- Make the changes that you want to the default template. For more information on Liquid variables and common use cases, refer to Shopify’s Developer page Customize robots.txt.liquid.

- Save changes to the robots.txt.liquid file in your published theme.

You can also delete the contents of the template and replace them with plain text rules. This method is strongly discouraged, because the rules may become out of date. If you choose this method, then Shopify can’t ensure that SEO best practices are applied to your robots.txt over time, or make changes to the file with future updates.

Note

ThemeKit or command line changes will preserve the robots.txt.liquid file. Uploading a theme from within the Themes section of the Shopify admin will not import robots.txt.liquid.

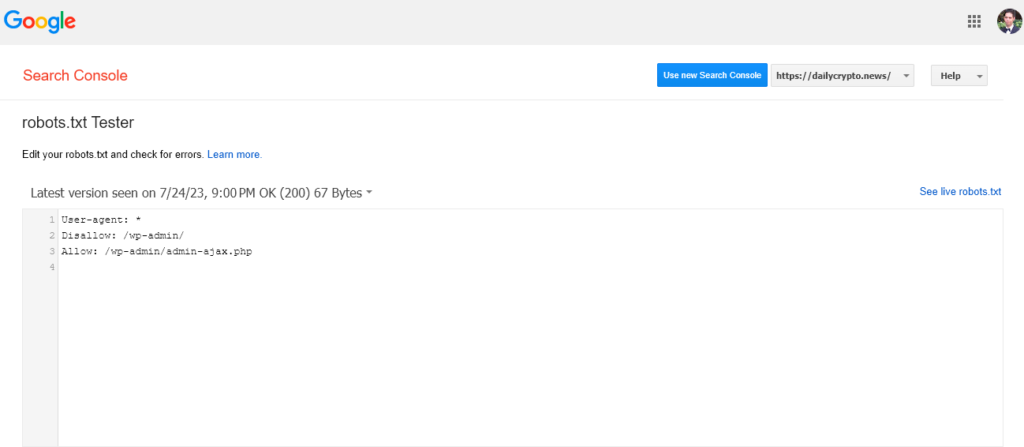

How to test your robots.txt file?

To test your robots.txt file, add and verify your site in Google Search Console. Once your site is verified, click Crawl in the menu and then robots.txt Tester. Newer versions of Search Console provide a robots.txt report under Settings instead.

Testing the robots.txt file verifies that Google can crawl all of your important URLs.
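A similar check can be run locally before uploading the file. The sketch below is a rough approximation, not a replacement for Google's own tools: it uses Python's standard urllib.robotparser module, which matches paths by simple prefix and may disagree with Google on wildcard rules. The function blocked_urls, the rules, and the URLs are hypothetical examples.

```python
from urllib.robotparser import RobotFileParser


def blocked_urls(robots_txt: str, urls: list[str], agent: str = "Googlebot") -> list[str]:
    """Return the URLs from `urls` that the rules forbid `agent` to crawl."""
    parser = RobotFileParser()
    parser.parse(robots_txt.splitlines())
    return [url for url in urls if not parser.can_fetch(agent, url)]


# Example rules and example URLs that should stay crawlable.
rules = """\
User-agent: *
Disallow: /intranet/
Disallow: /login.php
"""
important = [
    "https://www.example.com/",
    "https://www.example.com/products/shoes.html",
    "https://www.example.com/login.php",
]

problems = blocked_urls(rules, important)
print(problems)
```

An empty list means none of the important URLs is blocked; here the login page is reported because a Disallow rule matches it.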

To conclude, if you want control over how robots crawl your website, creating a robots.txt file is essential. If no file is present, robots may crawl every URL they find, and those URLs can end up in the search engine results.